S Lab

[Sibin Mohan]

Systems Security Research Group at GWU and University of Illinois

Software-Defined Control (SDC) for Smart Manufacturing Systems

This work is supported by the National Science Foundation (NSF) CPS Frontier grant, 1544901, “Software Defined Control for Smart Manufacturing Systems.” (NSF link)

Investigators: Sibin Mohan (PI), Sayan Mitra (Co-PI)

UIUC Team Members: Chien-Ying Chen, Bin Chou Kao, Chiao Hsieh

Collaborators at University of Michgan: University of Michgan Team

Collaborators at Cornell University: Elaine Shi, Mu Zhang (currently at University of Utah), Yue Duan

A Glance at SDC:

Software-Defined Control (SDC) is a revolutionary methodology for controlling manufacturing systems that uses a global view of the entire manufacturing system, including all of the physical components (machines, robots, and parts to be processed) as well as the cyber components (logic controllers, RFID readers, and networks). As manufacturing systems become more complex and more connected, they become more susceptible to small faults that could cascade into major failures or even cyber-attacks that enter the plant, such as, through the internet. In this project, models of both the cyber and physical components will be used to predict the expected behavior of the manufacturing system. Since the components of the manufacturing system are tightly coupled in both time and space, such a temporal-physical coupling, together with high-fidelity models of the system, allows any fault or attack that changes the behavior of the system to be detected and classified. Once detected and identified, the system will compute new routes for the physical parts through the plant, thus avoiding the affected locations. These new routes will be directly downloaded to the low-level controllers that communicate with the machines and robots, and will keep production operating (albeit at a reduced level), even in the face of an otherwise catastrophic fault. These algorithms will be inspired by the successful approach of Software-Defined Networking. Anomaly detection methods will be developed that can ascertain the difference between the expected (modeled) behavior of the system and the observed behavior (from sensors). Anomalies will be detected both at short time-scales, using high-fidelity models, and longer time-scales, using machine learning and statistical-based methods. The detection and classification of anomalies, whether they be random faults or cyber-attacks, will represent a significant contribution, and enable the re-programming of the control systems (through re-routing the parts) to continue production.

Publications:

- Yassine Qamsane, Chien-Ying Chen, Efe C. Balta, Bin-Chou Kao, Sibin Mohan, James Moyne, Dawn Tilbury and Kira Barton, “A Unified Digital Twin Framework for Real-time Monitoring and Evaluation of Smart Manufacturing Systems,” in 15th IEEE International Conference on Automation Science and Engineering (CASE), Aug. 2019. (PDF)

- Mu Zhang, Chien-Ying Chen, Bin-Chou Kao, Yassine Qamsane, Yuru Shao, Yikai Lin, Elain Shi, Sibin Mohan, Kira Barton, James Moyne and Z. Morley Mao, “Towards Automated Safety Vetting of PLC Code in Real-World Plants,” in the Proceedings of the IEEE Symposium on Security & Privacy (S&P), May 2019. (PDF)

- Potok, Matthew, Chien-Ying Chen, Sayan Mitra, and Sibin Mohan. “SDCworks: a formal framework for software defined control of smart manufacturing systems.” in Proceedings of the 9th ACM/IEEE International Conference on Cyber-Physical Systems (ICCPS), pp. 88-97. IEEE Press, 2018. (PDF)

Projects:

Modeling Framework for SDC

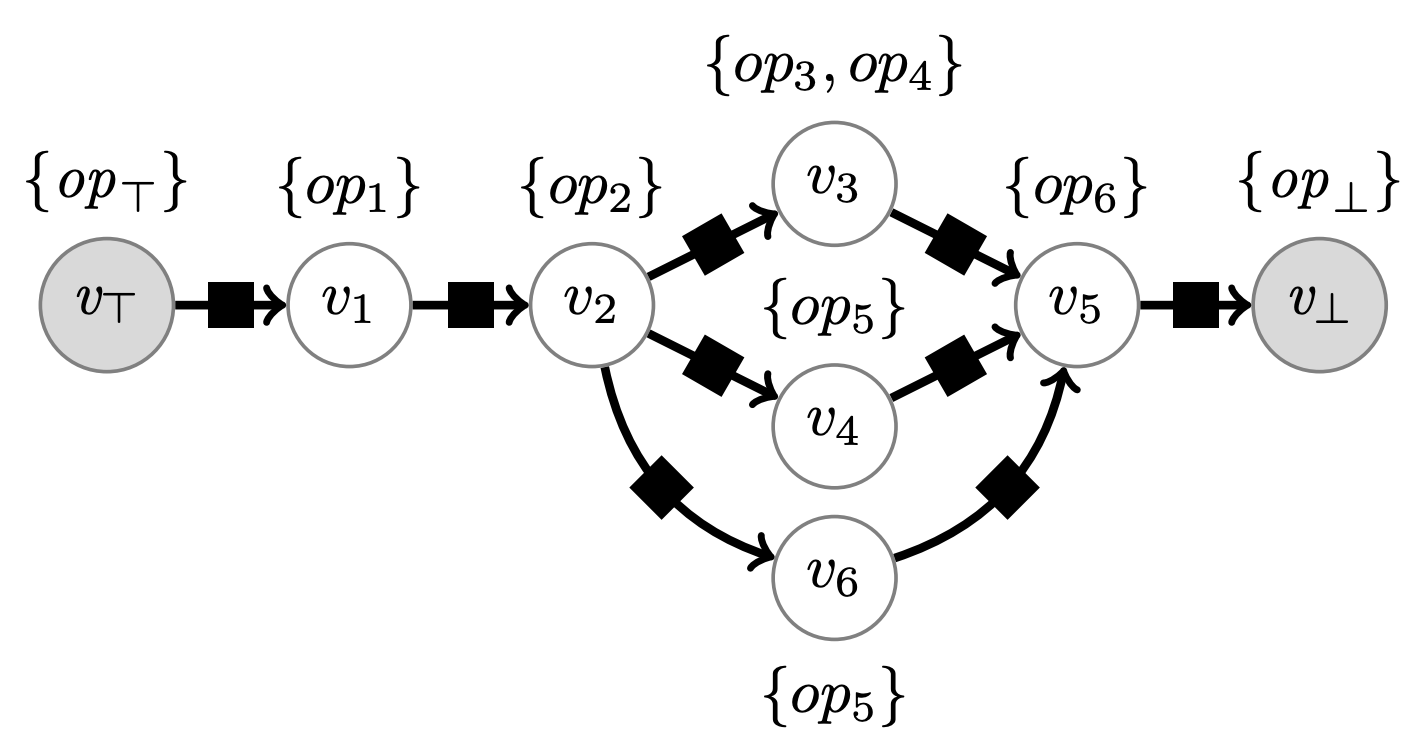

Discrete manufacturing systems are complex cyberphysical systems (CPS) and their availability, performance, and quality have a big impact on the economy. Smart manufacturing promises to improve these aspects. One key approach that is being pursued in this context is the creation of centralized software-defined control (SDC) architectures and strategies that use diverse sensors and data sources to make manufacturing more adaptive, resilient, and programmable. In this paper, we present SDCWorks—a modeling and simulation framework for SDC. It consists of the semantic structures for creating models, a baseline controller, and an open source implementation of a discrete event simulator for SDCWorks models. We provide the semantics of such a manufacturing system in terms of a discrete transition system which sets up the platform for future research in a new class of problems in formal verification, synthesis, and monitoring. We illustrate the expressive power of SDCWorks by modeling the realistic SMART manufacturing testbed of University of Michigan. We show how our open source SDCWorks simulator can be used to evaluate relevant metrics (throughput, latency, and load) for example manufacturing systems.

An example of the graph representing a linear manufacturing line modeled using SDCWorks.

Safety Vetting of PLC Code

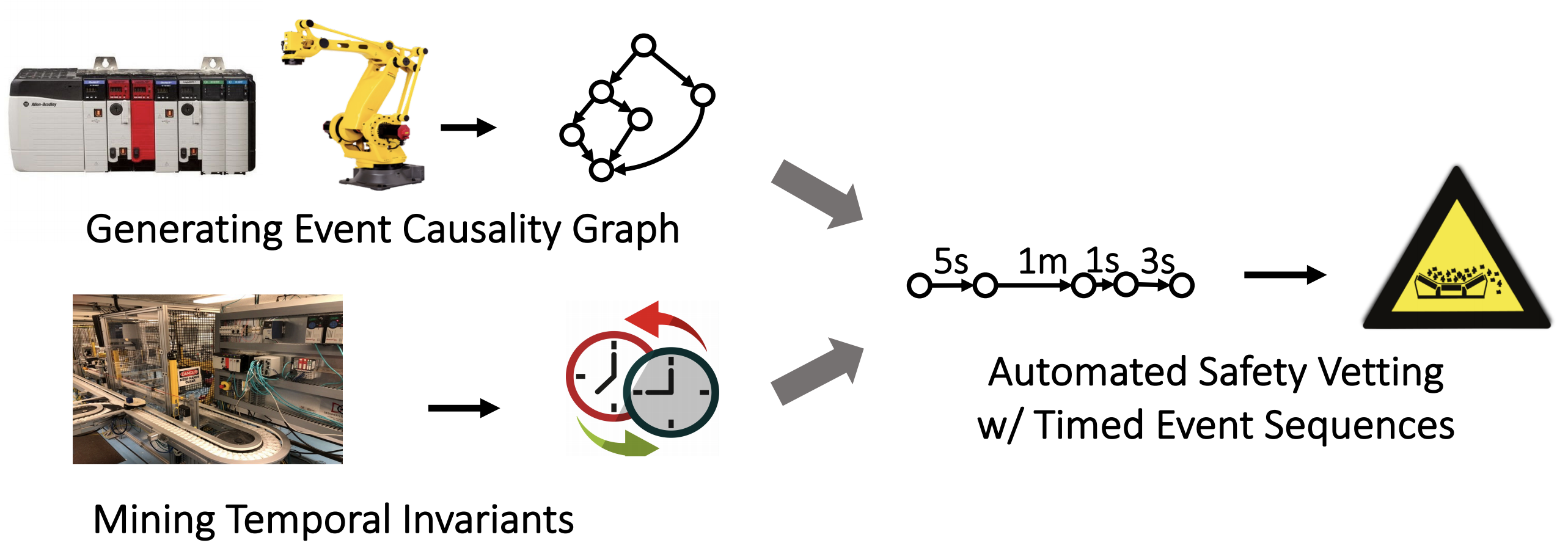

Safety violations in programmable logic controllers (PLCs), caused either by faults or attacks, have recently garnered significant attention. However, prior efforts at PLC code vetting suffer from many drawbacks. Static analyses and verification cause significant false positives and cannot reveal specific runtime contexts. Dynamic analyses and symbolic execution, on the other hand, fail due to their inability to handle real-world PLC programs that are event-driven and timing sensitive. In this paper, we propose VETPLC, a temporal context-aware, program analysisbased approach to produce timed event sequences that can be used for automatic safety vetting. To this end, we (a) perform static program analysis to create timed event causality graphs in order to understand causal relations among events in PLC code and (b) mine temporal invariants from data traces collected in Industrial Control System (ICS) testbeds to quantitatively gauge temporal dependencies that are constrained by machine operations. Our VETPLC prototype has been implemented in 15K lines of code. We evaluate it on 10 real-world scenarios from two different ICS settings. Our experiments show that VETPLC outperforms state-of-the-art techniques and can generate event sequences that can be used to automatically detect hidden safety violations.

Overview of the VetPLC system. The safety vetting consists of three major steps: (1) generating event causality graph, (2) mining temporal invariants and (3) automated safety vetting with timed event sequences.

Digital Twin Platform

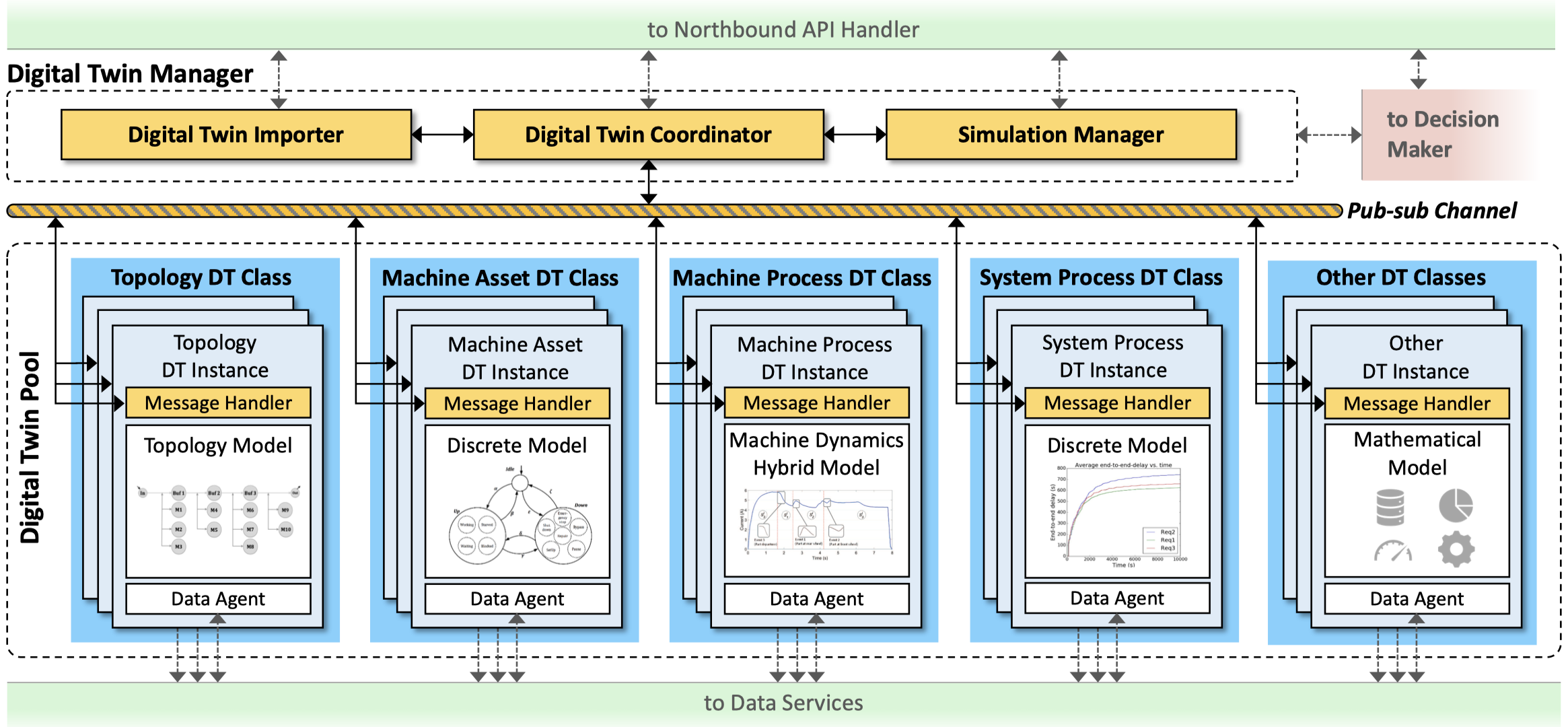

Digital Twin (DT) is one of the key enabling technologies for realizing the promise of Smart Manufacturing (SM) and Industry 4.0 to improve production systems operation. Driven by the generation and analysis of high volume data coming from interconnected cyber and physical spaces, DTs are real-time digital images of physical systems, processes or products that help evaluate and improve business performance. This paper proposes a novel DT architecture for the realtime monitoring and evaluation of large-scale SM systems. An application to a manufacturing flow-shop is presented to illustrate the usefulness of the proposed methodology.

Architecture of the digital twin (DT) platform in the SDC framework. The digital twin platform mainly consists of a DT pool that hosts the deployed DT instances and a DT manager that coordinates the communication between DT instances.

Dione: A protocol verification system built with Dafny for I/O Automata

Input/Output Automata (IOA) is an expressive and popular specification framework with built-in properties for compositional reasoning. It has been shown to be effective in specifying and analyzing distributed and networked systems. The available verification engines for IOA are all based on interactive theorem provers such as Isabelle, Larch, PVS, and Coq, and are expressive but require heavy human interaction. Motivated by the advances in SMT solvers, in this work we explore a different expressivity-automation tradeoff for IOA. We present Dione, the first IOA analysis system built with Dafny and its SMT-powered toolchain. Our translator tool converts Python-esque Dione language specifications to Dafny which enables Bounded Model Checking and k-induction-based invariant checking using Z3. States, actions, and transitions can be specified with common data types such as algebraic types, bit-vectors, and arrays, while ensuring that the resulting formulas are expressed mostly in fragments solvable by Z3 and other SMT solvers. We present successful applications of Dione in verification of Asynchronous LCR algorithm, two self-stabilizing mutual exclusion algorithms, and CAN bus Arbitration: we automatically prove key invariants of all four protocols; for the last three this involves reasoning about arbitrary number of participants. These analyses are largely automatic with minimal manual inputs needed, and they demonstrate the effectiveness of this approach in analyzing networked and distributed systems.

Semantics for synchronous algorithms over asynchronous network with synchronizers

Designing and proving correctness of distributed algorithms through asynchronous communication is notoriously difficult. More often, one would start with stronger assumptions such as the synchronous network model for design purposes. However, the algorithms would only work with environments satisfying synchrony assumptions. In this work, we revisit synchronizers that can be used to run a synchronous algorithm on top of an asynchronous network model. We aim to provide execution semantics for synchronous algorithms to run with synchronizers, and this enables users to prototype a synchronous algorithm and verify against asynchronous communication. Further, this execution model can serve as a verified golden reference for testing or runtime monitoring the correctness of, for example, an optimized asynchronous version of the algorithm.